Biography

I'm currently a graduate student in the Master of Science in Intelligent Information Systems (MIIS) program at Carnegie Mellon University's Language Technologies Institute (LTI), School of Computer Science (SCS).

Previously, I obtained my Bachelor's degree in Computer Science and Technology from Chu Kochen Honors College, Zhejiang University, where I did research on time series classification under the supervision of Prof. Yang Yang. I also had a wonderful research experience in the CHAI lab at UChicago with Prof. Chenhao Tan, evaluating long-form multimodal summaries generated by LLMs.

I'm looking for MLE/SE internships for Summer 2025!

- Natural Language Processing

- Large Language Model Design and Evaluation

- Detection and Mitigation of Hallucinations

- Data Analysis

- Carnegie Mellon University

  M.S. in Intelligent Information Systems (MIIS), 08/2024 - 12/2025

- Zhejiang University

  Bachelor's degree in Computer Science and Technology, 09/2020 - 06/2024

News

- 2024.09: 🎉🎉 One paper about segmented time series classification was accepted by NeurIPS 2024!

- 2024.09: I started new research on hallucination mitigation at the Language Technologies Institute under the guidance of Maarten Sap!

- 2024.08: I started my master's program at the School of Computer Science, Carnegie Mellon University!

- 2024.07: 🎉🎉 One paper about multimodal long-form summarization was accepted by COLM 2024. My first-author paper!

- 2024.06: 🎓 I graduated from CKC Honors College, Zhejiang University, as an Outstanding Graduate.

Publications

Research and Experience

Safeguarding LLMs Against Hallucinations

- Developed reinforcement learning algorithms to keep model outputs factual

- Built synthetic datasets of hallucinated responses to serve as negative training examples

- Analyzed tradeoffs between factuality and utility across various domains

Characterizing Multimodal Long-form Summarization

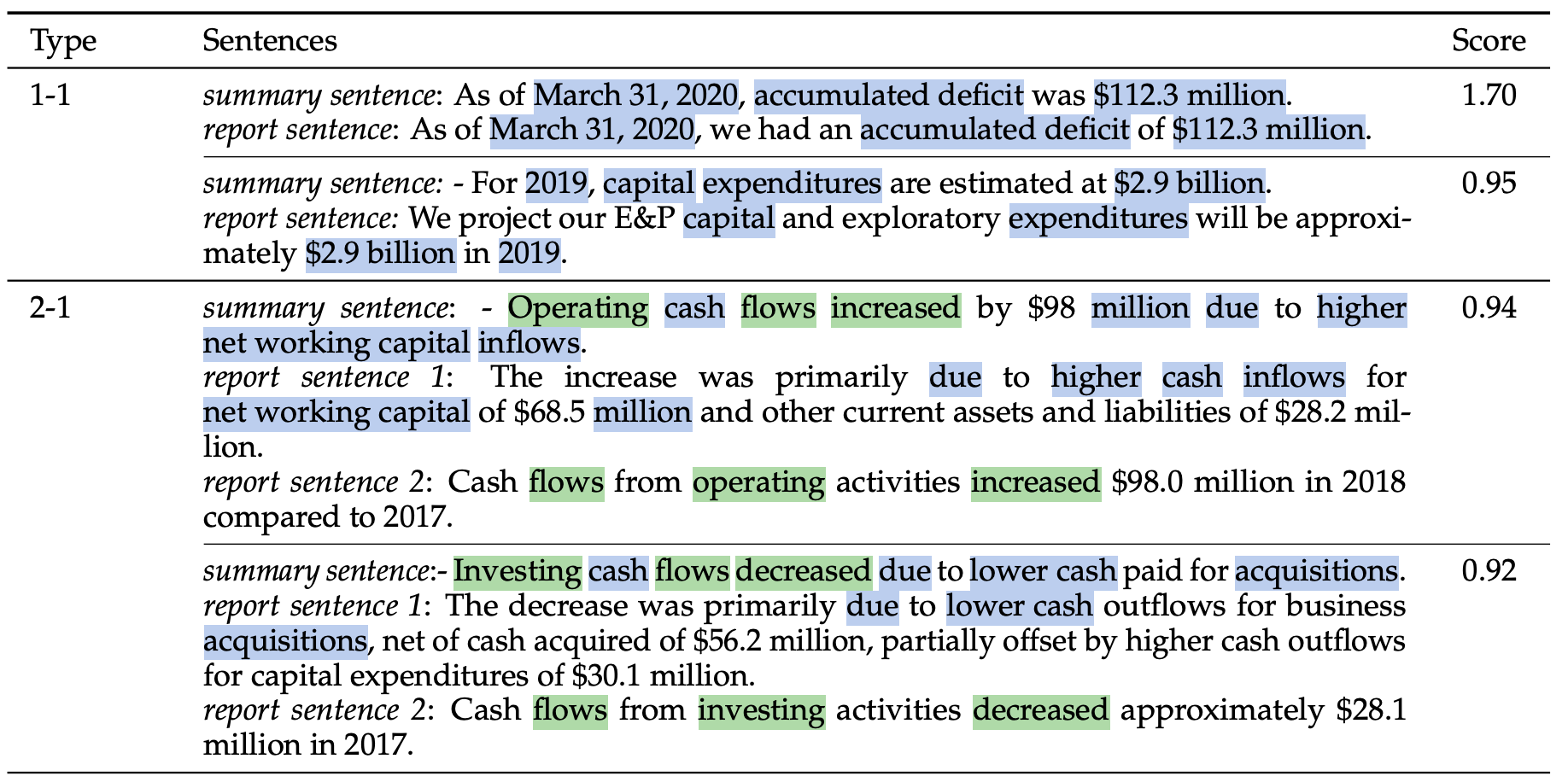

- Developed an evaluation framework for LLM-generated multimodal long-form financial report summaries, integrating textual and numeric analysis

- Demonstrated that GPT-3.5 and Cohere are unable to perform such long-form multimodal summarization

- Compared the behavior of Claude 2.0/2.1 and GPT-4, identifying position bias and, through experiments on shuffled reports, Claude's possible ability to recognize important information

- Revealed Claude's superior use of numbers: 8.37% of the numbers in its summaries come from report tables, vs. 4.98% for GPT-4

- Pioneered a taxonomy of numeric hallucinations, identifying a hallucination ratio of ~5% and exploring prompt-engineering solutions

Context-aware Consistency Learning Framework for Segmented Time Series Classification

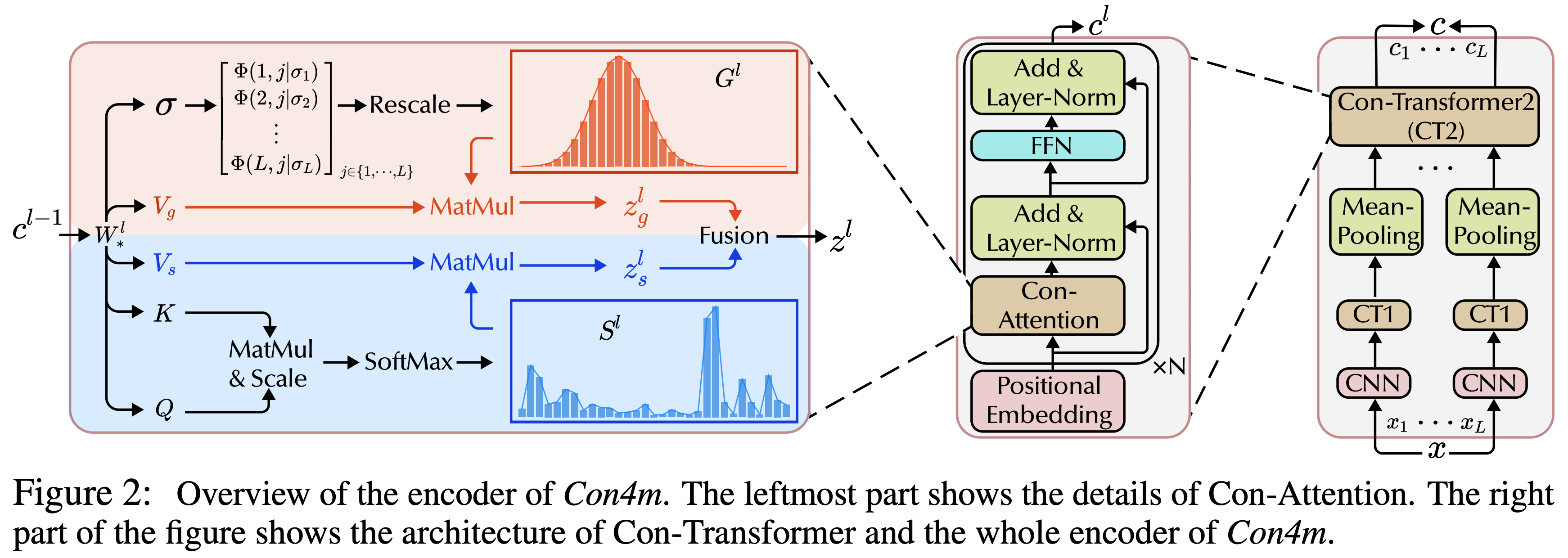

- Pioneered Con4m, a practical consistency learning framework for segmented time series classification (TSC) on raw time series with Multiple classes and Varying Durations (MVD)

- Integrated prior knowledge of data locality and label coherence, enhancing model focus on contextual information

- Designed a progressive harmonization approach, improving model robustness against inconsistent training labels

- Validated Con4m's superior performance through experiments on two public MVD datasets and one private MVD dataset

DNN for Reverse Design of Optical Devices

- Constructed a Forward Neural Network (FNN) as a surrogate for traditional EMF simulation

- Constructed TandemNet by combining the FNN with an Inverse Neural Network (INN) in a tandem structure, addressing the non-uniqueness problem of inverse design and the black-box problem

Honors

Skills

Python, C/C++, Java

PyTorch, NumPy, Pandas, NLTK, Matplotlib, Hugging Face, Transformers

Volleyball, Swimming, Movies

Contact

- tianyuca@cs.cmu.edu

- 5000 Forbes Ave, Pittsburgh, PA 15213, USA